Agentic AI & Intelligent Automation for Real Business Systems

We design and build AI-powered systems that work reliably in production. Our approach combines LLM-based intelligence with deterministic logic, strict validation, and operational safeguards.

Production-ready AI systems - not experiments

Start with a free consultation

PlumPix AI development core capabilities

LLM-powered systems

Structured generation, validation, normalization, and decision support using large language models with strong guardrails.

Agentic AI & workflow automation

AI-driven agents that orchestrate background jobs, assist users, and automate internal workflows inside real systems.

AI-ready data pipelines

Reliable ingestion, normalization, and validation pipelines designed to support AI-powered processing and analytics.

Key principles

Production-grade LLM integration

Structured outputs with strict validation

Deterministic fallback logic

Asynchronous background workflows

Monitoring, observability, and auditability

Predictable AI cost control

AI services we deliver

AI-powered data normalization pipelines

Transform unstructured or inconsistent data into reliable, structured formats suitable for search, analytics, and automation.

Intelligent search & query understanding

Process complex free-text queries using AI-assisted intent extraction combined with deterministic matching and relevance scoring.

LLM-assisted decision support

Copilots and admin tools that help users analyze data, trigger workflows, and make informed decisions — with guardrails and validation in place.

Background AI workflows & automation

Queue-based, asynchronous AI processing designed for scale, reliability, and controlled execution.

AI integration into existing systems

Safe and predictable LLM integration within APIs, backoffice tools, data pipelines, and internal platforms.

Our approach to production AI

Strict schemas & output validation

All AI-generated outputs are validated against predefined contracts to ensure consistency and correctness.
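As a minimal sketch of what validating model output against a contract can look like, the Python below parses a raw response and rejects anything that does not match an expected shape. The `ProductRecord` fields, the `ContractError` type, and the `parse_product` helper are hypothetical names chosen for illustration, and only the standard library is used; a real project might rely on a schema library instead.

```python
import json
from dataclasses import dataclass


# Hypothetical contract for a normalized product record; the field names
# are illustrative assumptions, not taken from a real project.
@dataclass(frozen=True)
class ProductRecord:
    title: str
    brand: str
    weight_grams: int


class ContractError(ValueError):
    """Raised when model output does not satisfy the contract."""


def parse_product(raw: str) -> ProductRecord:
    """Parse raw model output and reject anything outside the contract."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ContractError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ContractError("expected a JSON object")

    expected = {"title": str, "brand": str, "weight_grams": int}
    if set(data) != set(expected):
        diff = sorted(set(data) ^ set(expected))
        raise ContractError(f"unexpected or missing fields: {diff}")
    for name, typ in expected.items():
        value = data[name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, typ):
            raise ContractError(f"{name} must be {typ.__name__}")
    if not data["title"].strip():
        raise ContractError("title must not be empty")
    if data["weight_grams"] <= 0:
        raise ContractError("weight_grams must be positive")
    return ProductRecord(**data)
```

Rejecting extra fields as well as missing ones is a deliberate choice in this sketch: downstream code then sees either a fully valid record or an explicit error, never a partially trusted one.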

Deterministic fallback mechanisms

Non-AI logic guarantees stable system behavior when AI services are unavailable or return low-confidence results.
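One way to picture a deterministic fallback is the Python sketch below: the AI classifier is tried first, and plain keyword rules take over when it fails or reports low confidence. The `MIN_CONFIDENCE` threshold, the keyword rules, and the `categorize` function are assumptions made for the example, not a description of a specific production system.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical threshold; a real system would tune this per use case.
MIN_CONFIDENCE = 0.8

# The AI classifier is passed in as a callable returning (label, confidence).
Classifier = Callable[[str], Tuple[str, float]]


def rule_based_category(title: str) -> str:
    """Deterministic fallback: plain keyword rules, no model involved."""
    lowered = title.lower()
    if re.search(r"\b(milk|yogurt|cheese)\b", lowered):
        return "dairy"
    if re.search(r"\b(bread|bun|bagel)\b", lowered):
        return "bakery"
    return "uncategorized"


def categorize(title: str, ai_classifier: Optional[Classifier]) -> Tuple[str, str]:
    """Return (category, source), where source is 'ai' or 'rules'."""
    if ai_classifier is not None:
        try:
            label, confidence = ai_classifier(title)
            if confidence >= MIN_CONFIDENCE:
                return label, "ai"
        except Exception:
            # Service unavailable, timeout, malformed response, and so on:
            # fall through to the deterministic path.
            pass
    return rule_based_category(title), "rules"
```

Returning the source alongside the result keeps it visible, for monitoring and auditing, how often the rules had to step in.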

Asynchronous execution

AI processing runs in background jobs to avoid blocking critical user flows.
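The pattern can be sketched with an in-process queue and a fixed pool of workers, as in the Python below. This is a simplified stand-in: `run_jobs`, `worker`, and `fake_ai_call` are hypothetical names, and a production setup would typically use a persistent job queue rather than `asyncio.Queue`.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List


async def worker(
    queue: "asyncio.Queue[str]",
    process: Callable[[str], Awaitable[str]],
    results: Dict[str, str],
) -> None:
    """Pull job payloads off the queue and process them one at a time."""
    while True:
        job = await queue.get()
        try:
            results[job] = await process(job)
        except Exception as exc:
            # A failed job is recorded instead of killing the worker.
            results[job] = f"failed: {exc}"
        finally:
            queue.task_done()


async def run_jobs(
    jobs: List[str],
    process: Callable[[str], Awaitable[str]],
    concurrency: int = 2,
) -> Dict[str, str]:
    """Enqueue jobs, process them with a fixed number of workers, return results."""
    queue: "asyncio.Queue[str]" = asyncio.Queue()
    results: Dict[str, str] = {}
    workers = [
        asyncio.create_task(worker(queue, process, results))
        for _ in range(concurrency)
    ]
    for job in jobs:
        queue.put_nowait(job)
    await queue.join()  # wait until every job has been marked done
    for task in workers:
        task.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


async def fake_ai_call(text: str) -> str:
    """Stand-in for a slow model call (hypothetical)."""
    await asyncio.sleep(0.01)
    return text.upper()
```

Calling `asyncio.run(run_jobs(["a", "b", "c"], fake_ai_call))` processes all three jobs with two concurrent workers; the caller that enqueued them is never blocked on an individual model call.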

Cost & rate control

Controlled request rates, batching, and monitoring keep AI usage predictable and cost-efficient.
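A common way to control request rates is a token bucket, paired with a small batching helper so that one request can cover several records. The Python sketch below shows both under stated assumptions: the `TokenBucket` class, the `batched` helper, and their parameters are illustrative and not tied to any particular provider's limits.

```python
import time
from typing import Callable, Iterator, List, Sequence


class TokenBucket:
    """Allow roughly `rate` requests per second, with bursts up to `capacity`."""

    def __init__(
        self,
        rate: float,
        capacity: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; return False instead of blocking."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def batched(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Group items so that one model request can cover several records."""
    for start in range(0, len(items), size):
        yield list(items[start:start + size])
```

In this sketch a caller checks `try_acquire()` before each request and re-queues the batch when it returns `False`, which keeps spend bounded by the configured rate.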

Full audit & traceability

AI inputs, outputs, and intermediate states are logged and stored for transparency and future analysis.
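For illustration, an audit trail can be as simple as one JSON line per AI call. The Python sketch below assumes a hypothetical `write_audit_record` helper and record layout (`job_id`, `stage`, `model`, and so on); the actual fields and storage backend depend on the system.

```python
import hashlib
import io
import json
from datetime import datetime, timezone
from typing import Any, Dict, TextIO


def write_audit_record(
    log: TextIO,
    *,
    job_id: str,
    stage: str,
    model: str,
    prompt: str,
    output: Any,
) -> Dict[str, Any]:
    """Append one JSON line describing an AI call and return the record."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "job_id": job_id,
        "stage": stage,  # e.g. "extracted", "validated", "fallback"
        "model": model,
        "prompt": prompt,
        # A content hash makes it cheap to find repeated inputs later.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
    }
    log.write(json.dumps(record, ensure_ascii=False, sort_keys=True) + "\n")
    return record


# Usage: any text stream works; an in-memory buffer stands in for a log file.
buffer = io.StringIO()
write_audit_record(
    buffer,
    job_id="job-1",
    stage="validated",
    model="example-model",  # placeholder name, not a real model identifier
    prompt="Normalize: MILK 1L",
    output={"title": "Milk 1 L"},
)
```

Writing one record per stage (raw output, validated output, fallback decision) is what lets intermediate states be reconstructed afterwards.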

We design AI systems to enhance reliability, not compromise it. AI is never a single point of failure.

Real AI case studies

Open Data Aggregation Service for Entrepreneurs, Legal Entities & Tenders

An open data aggregation service that consolidates multiple public registries into a unified, searchable platform. The system normalizes heterogeneous datasets, preserves data provenance and update history, and enables reliable cross-linking between entrepreneurs, legal entities, and related tenders.

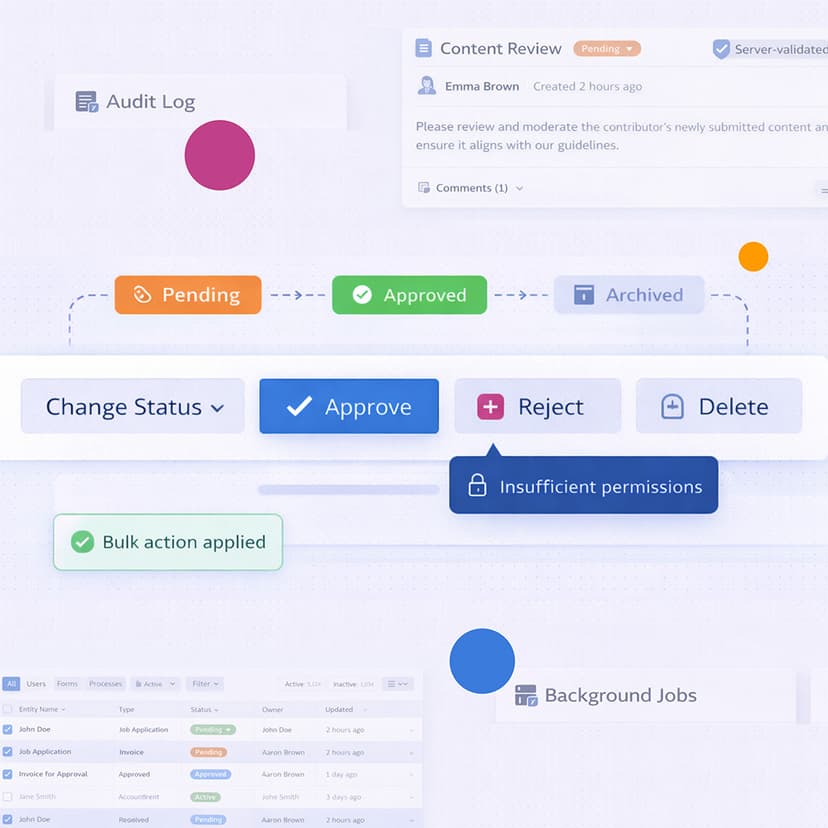

Admin Panels & Backoffice Suite for Internal Operations

A permission-driven admin and backoffice suite built for high-risk internal operations, where control, safety, and auditability are critical. The system enforces business rules server-side, supports role-based workflows, and provides clear visibility into actions, statuses, and execution history.

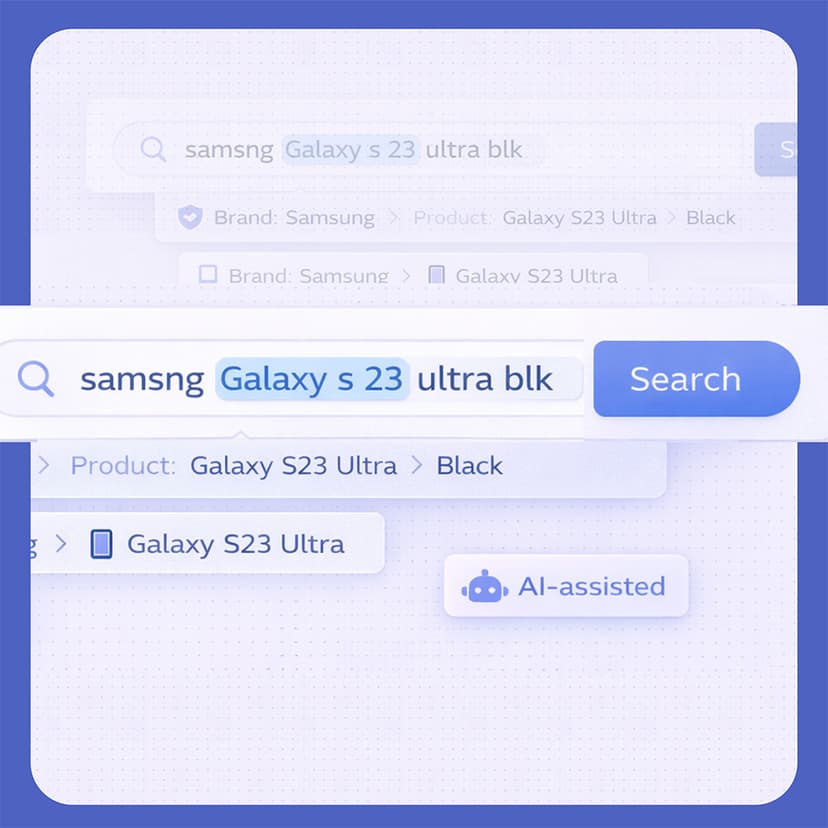

Smart Search System with AI-assisted Query Understanding

A smart search system built for real-world free-text queries, combining deterministic relevance modeling with AI-assisted query understanding. The solution handles typos, fuzzy matches, and mixed-language input while maintaining predictable behavior, stable relevance, and high performance at scale.

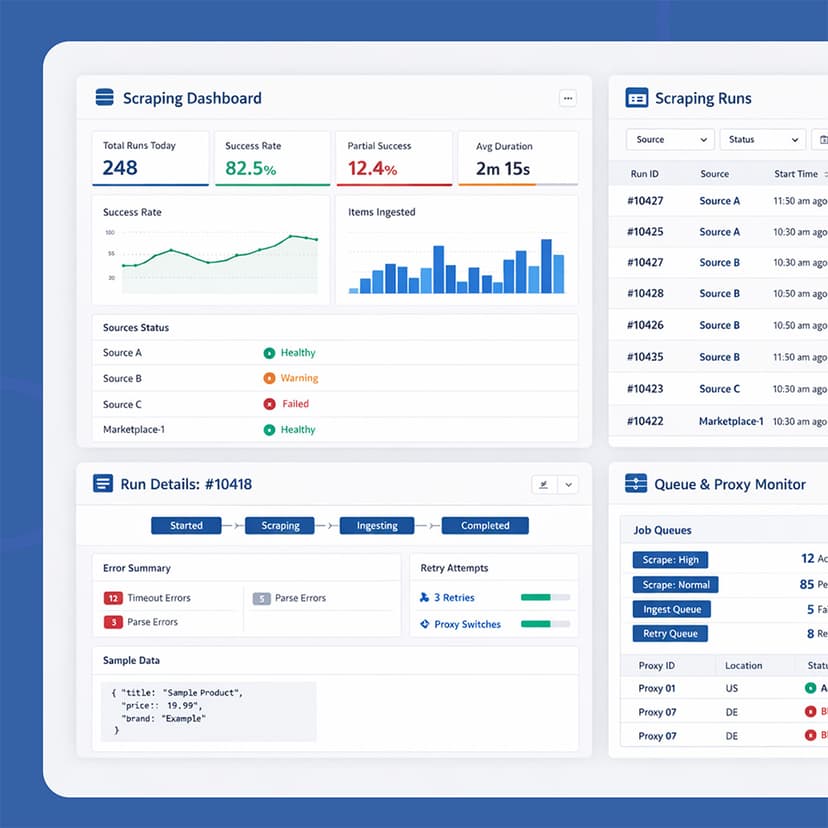
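To illustrate how deterministic relevance scoring and an optional AI step can fit together in a search like the one described above, the Python sketch below ranks documents with typo-tolerant token matching from the standard library's `difflib`. The `search` function, its `rewrite` hook, and the `0.6` threshold are assumptions for the example; they are not the scoring model used in the project itself.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Optional, Tuple


def normalize(text: str) -> List[str]:
    """Lowercase and split into tokens; deterministic by construction."""
    return text.lower().split()


def token_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1]; tolerates small typos such as 'iphnoe'."""
    return SequenceMatcher(None, a, b).ratio()


def score(query: str, document: str) -> float:
    """Average, over query tokens, of the best fuzzy match in the document."""
    q_tokens, d_tokens = normalize(query), normalize(document)
    if not q_tokens or not d_tokens:
        return 0.0
    best = [max(token_similarity(q, d) for d in d_tokens) for q in q_tokens]
    return sum(best) / len(best)


def search(
    query: str,
    documents: List[str],
    rewrite: Optional[Callable[[str], str]] = None,
    threshold: float = 0.6,
) -> List[Tuple[str, float]]:
    """Rank documents; `rewrite` is an optional AI-assisted query cleanup hook."""
    if rewrite is not None:
        try:
            query = rewrite(query)
        except Exception:
            pass  # keep the raw query if the AI step fails
    scored = [(doc, score(query, doc)) for doc in documents]
    hits = [(doc, s) for doc, s in scored if s >= threshold]
    # Sort by score, then by text, so equal scores come back in a stable order.
    return sorted(hits, key=lambda pair: (-pair[1], pair[0]))
```

Because the AI step only rewrites the query and the ranking itself stays rule-based, the same query text always produces the same ordering, with or without the model available.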

Retail Scraping & Ingestion System for E-commerce Data Collection

The system is designed for reliable extraction and ingestion of e-commerce product and promotional data. It supports multiple pagination strategies, operates through a managed proxy pool, and ensures stable data collection at scale. The goal is to provide consistent, observable, and fault-tolerant retail data pipelines.

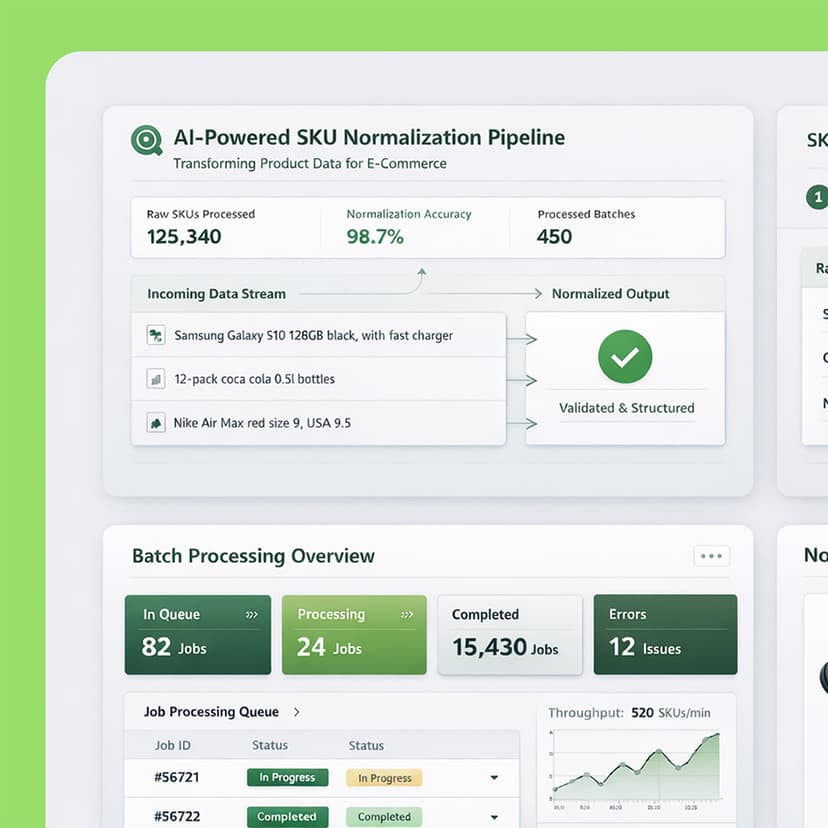

AI-powered SKU Normalization Pipeline for E-commerce Operations

The platform is designed to automatically normalize and standardize product data at scale. It transforms raw, inconsistent SKU titles and attributes into structured, high-quality product information, ensuring consistency across catalogs. The goal is to improve data accuracy, search relevance, and operational efficiency for e-commerce systems.

Build AI you can rely on

Want to integrate AI into real production systems, not just run experiments? We can help. Let’s discuss your use case.

Iryna, Client Manager

Our AI development process

Discovery & feasibility

We analyze data quality, workflows, constraints, and failure scenarios to determine where AI adds real value — and where it doesn’t.

Architecture & guardrails

AI is designed as part of a larger system: schemas, validation rules, fallbacks, queues, and monitoring are defined upfront.

Implementation & integration

AI components are integrated into existing services, APIs, and workflows with strict output contracts and error handling.

Stabilization & monitoring

We introduce observability, auditing, and cost controls to ensure predictable behavior in production.

Our tech stack includes

React

Next.js

Astro

TypeScript

JavaScript

Vue

Redux

Zustand

Tailwind CSS

Sass/SCSS

Bootstrap

Material UI

WebGL

Three.js

Framer Motion

GSAP

Alpine.js

Laravel

PHP

Symfony

Node.js

Express.js

Python

FastAPI

Django

REST APIs

GraphQL

Blade / HTML

Laravel Horizon

MySQL

PostgreSQL

Redis

Firestore

MongoDB

Docker

CI/CD

Git

Vercel

Netlify

AWS

GCP

Azure

WordPress

WooCommerce

Magento

OpenAI API

Auth