Open Data Aggregation Service for Entrepreneurs, Legal Entities & Tenders

Product team

1 backend engineer

1 data-focused engineer

QA support during validation phase

Duration: 9 months

Technologies

Laravel

MongoDB

Redis

Blade / HTML

Alpine.js

Laravel Horizon

Problem Overview

Working with public open data related to entrepreneurs, legal entities, and tenders required aggregating information from multiple registries with different data structures, formats, and update cycles. These datasets were fragmented, inconsistent, and difficult to analyze in a unified way.

The absence of a centralized system made it challenging to:

- Search and filter data efficiently across multiple registries

- Cross-link entrepreneurs, legal entities, and related tenders or auctions

- Track data freshness, source attribution, and historical changes

- Adapt quickly to frequent schema and format changes in public registries

Traditional approaches based on rigid relational schemas proved insufficient for handling the variability and continuous evolution of open data sources at scale.

Our Role

We were responsible for the full-cycle design and development of the system, from data model definition and ingestion architecture through storage, search optimization, and administrative interfaces.

Solution

We designed and implemented an open data aggregation service that collects, normalizes, and unifies heterogeneous public data into a single, consistent platform.

The system ingests data from multiple open registries, consolidates it into a unified data model, and enables fast search, filtering, and cross-linking between related entities while preserving data transparency and update history.

Product features

Multi-source open data aggregation

Automated ingestion of registry data for entrepreneurs, legal entities, and tenders/auctions from heterogeneous public sources.

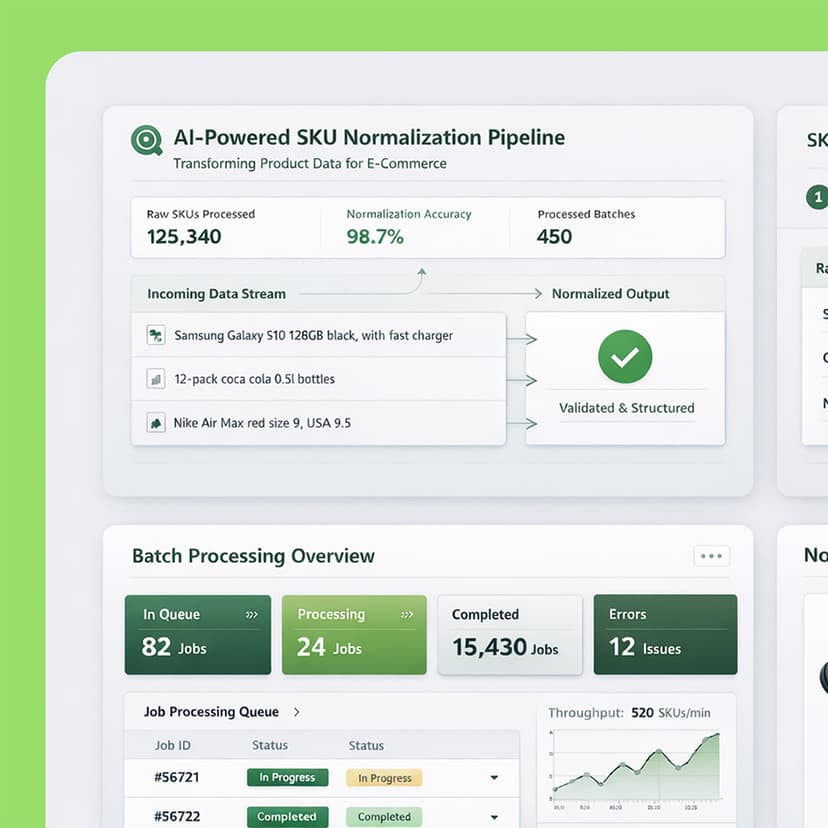

Data normalization & unification

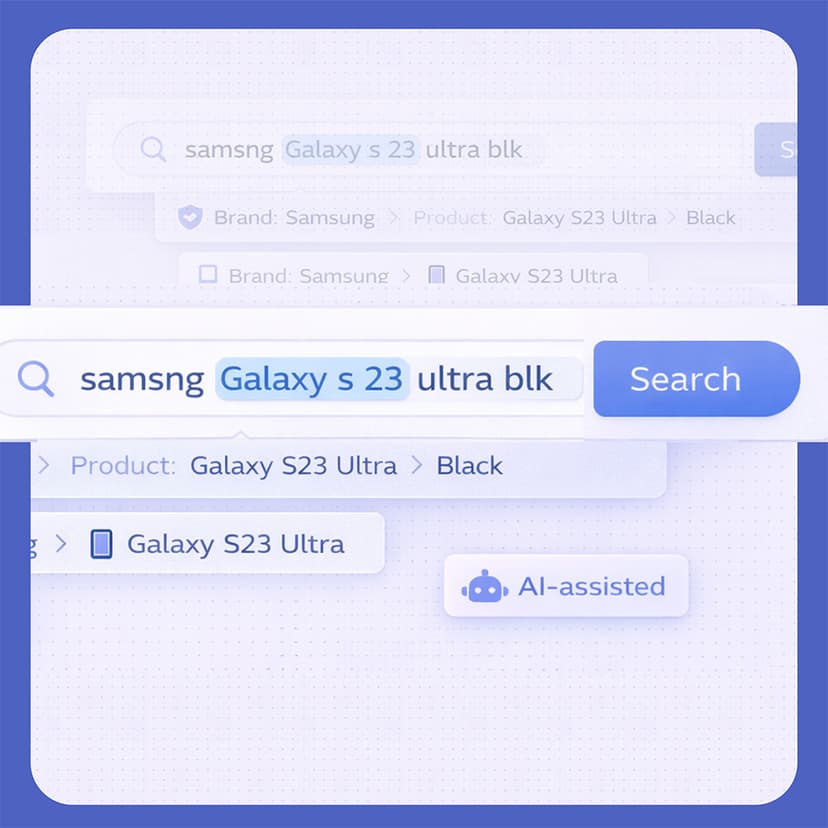

Transformation of inconsistent source structures into a unified data model optimized for search and analytical use cases.
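
To illustrate the idea, here is a minimal sketch of per-source normalization. It is written in Python for brevity (the production code was built on Laravel), and every name in it is an assumption made for illustration: the `UnifiedEntity` fields, the `registry_a` / `registry_b` source names, and the raw field layouts are hypothetical rather than taken from the real registries.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class UnifiedEntity:
    """Hypothetical unified shape; the real model is not described here."""
    registration_id: str
    name: str
    entity_type: str  # "entrepreneur" or "legal_entity"
    source: str       # which registry the record came from


def _clean(value: Any) -> str:
    """Trim and collapse whitespace so formatting differences disappear."""
    return " ".join(str(value).split())


def from_registry_a(raw: dict[str, Any]) -> UnifiedEntity:
    # Assumed layout of source A: flat keys.
    return UnifiedEntity(
        registration_id=_clean(raw["code"]),
        name=_clean(raw["full_name"]).upper(),
        entity_type="legal_entity",
        source="registry_a",
    )


def from_registry_b(raw: dict[str, Any]) -> UnifiedEntity:
    # Assumed layout of source B: nested keys, id may contain spaces.
    person = raw["person"]
    return UnifiedEntity(
        registration_id=_clean(person["tax_id"]).replace(" ", ""),
        name=_clean(f'{person["last"]} {person["first"]}').upper(),
        entity_type="entrepreneur",
        source="registry_b",
    )


# One normalizer per source; onboarding a source means adding one entry.
NORMALIZERS: dict[str, Callable[[dict[str, Any]], UnifiedEntity]] = {
    "registry_a": from_registry_a,
    "registry_b": from_registry_b,
}


def normalize(source: str, raw: dict[str, Any]) -> UnifiedEntity:
    """Dispatch a raw record to the normalizer registered for its source."""
    return NORMALIZERS[source](raw)
```

Keeping one small function per source means a format change in one registry touches only that registry's normalizer, while everything downstream keeps reading the same unified shape.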

Flexible document-based storage

A document-oriented storage approach using MongoDB to support evolving schemas and diverse registry formats without sacrificing consistency.

Entity profiles & relationships

Consolidated entity profiles with cross-linking between entrepreneurs, legal entities, and related tenders or auctions.
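
Cross-linking of this kind can be sketched as a join on a shared identifier. The example below is an illustrative Python sketch, not the production logic: it assumes that tenders list their participants by the same `registration_id` that entities carry, and the `tender_id` and `participant_ids` field names are hypothetical.

```python
from collections import defaultdict
from typing import Any


def link_tenders(
    entities: list[dict[str, Any]],
    tenders: list[dict[str, Any]],
) -> dict[str, list[str]]:
    """Map each known registration_id to the ids of tenders it takes part in."""
    known = {entity["registration_id"] for entity in entities}
    links: dict[str, list[str]] = defaultdict(list)
    for tender in tenders:
        # Tenders without a participant list are simply skipped.
        for participant_id in tender.get("participant_ids", []):
            # Participants missing from the entity registries are ignored
            # here; a real system might queue them for later resolution.
            if participant_id in known:
                links[participant_id].append(tender["tender_id"])
    return dict(links)


entities = [{"registration_id": "A1"}, {"registration_id": "B2"}]
tenders = [
    {"tender_id": "T1", "participant_ids": ["A1", "X9"]},
    {"tender_id": "T2", "participant_ids": ["A1", "B2"]},
]
print(link_tenders(entities, tenders))
```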

Fast search & filtering

Efficient querying and filtering across large datasets using optimized indexes.
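
As a rough illustration of index-friendly filtering, the sketch below builds a MongoDB-style filter document from optional search parameters. It is Python for brevity and makes several assumptions: the field names (`entity_type`, `region`, `updated_at`, `name`) are hypothetical, names are assumed to be stored upper-cased, and `SUGGESTED_INDEX` is only one plausible compound index, not the one actually deployed.

```python
import re
from datetime import datetime
from typing import Any, Optional

# Hypothetical compound index: equality fields first, then the range field.
# Written as (field, direction) pairs, the form MongoDB drivers accept.
SUGGESTED_INDEX = [("entity_type", 1), ("region", 1), ("updated_at", -1)]


def build_filter(
    entity_type: Optional[str] = None,
    region: Optional[str] = None,
    updated_since: Optional[datetime] = None,
    name_prefix: Optional[str] = None,
) -> dict[str, Any]:
    """Build a filter document containing only the criteria that were given."""
    query: dict[str, Any] = {}
    if entity_type:
        query["entity_type"] = entity_type
    if region:
        query["region"] = region
    if updated_since:
        query["updated_at"] = {"$gte": updated_since}
    if name_prefix:
        # An anchored, case-sensitive prefix pattern can use an index on
        # the field, unlike an unanchored "contains" pattern.
        query["name"] = {"$regex": "^" + re.escape(name_prefix.upper())}
    return query


print(build_filter(entity_type="legal_entity", name_prefix="acme"))
```

The resulting dictionary is the kind of document that would be passed to a collection's `find` call; omitting unused criteria keeps each query aligned with a prefix of the index.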

Data freshness & provenance tracking

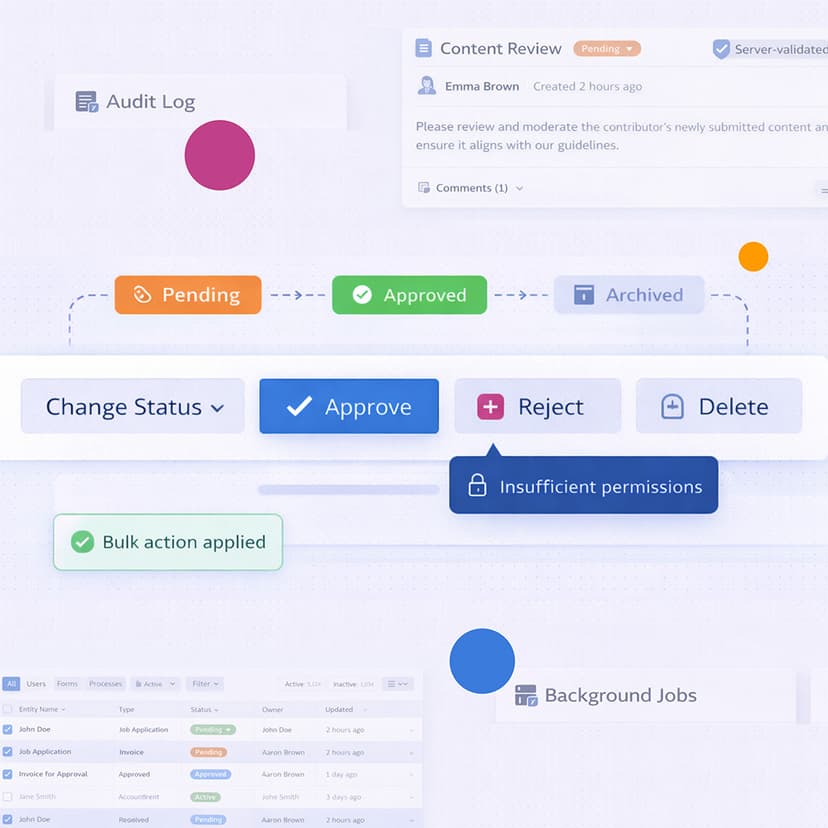

Update timestamps, change logs, and source attribution to ensure transparency and trustworthiness of the aggregated data.

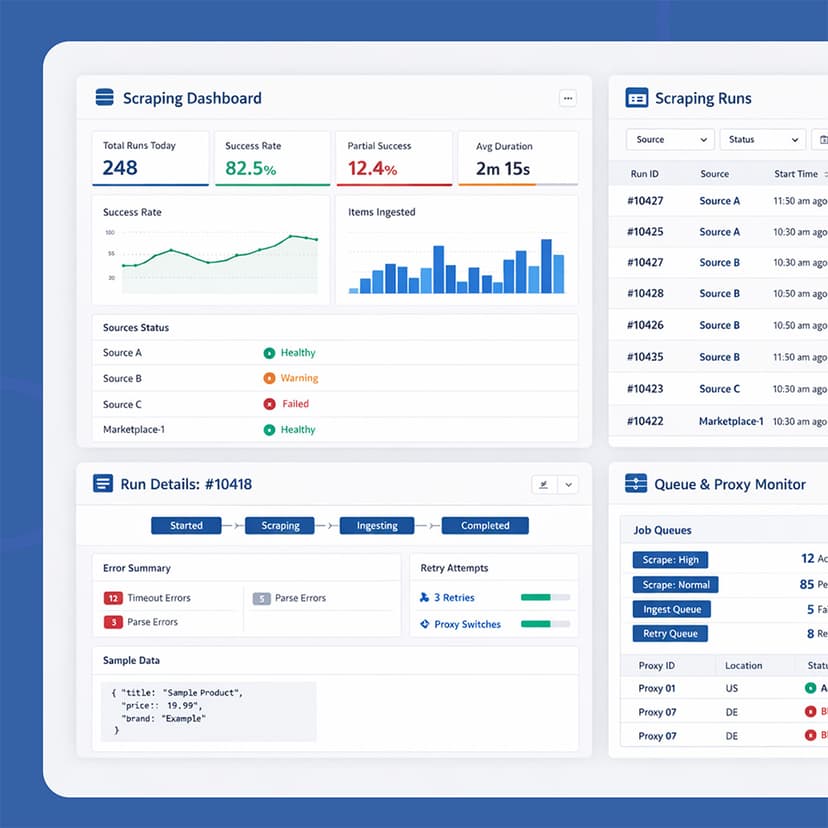
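
One way to picture freshness and provenance tracking is a document that carries its own change log. The following Python sketch is an assumption-laden illustration rather than the actual implementation: the `data`, `history`, `updated_at`, and `last_checked_at` fields and the `apply_update` helper are hypothetical names.

```python
from datetime import datetime, timezone
from typing import Any, Optional


def diff_fields(old: dict[str, Any], new: dict[str, Any]) -> dict[str, dict[str, Any]]:
    """Return {field: {"from": old_value, "to": new_value}} for changed fields."""
    changes: dict[str, dict[str, Any]] = {}
    for key in sorted(old.keys() | new.keys()):
        if old.get(key) != new.get(key):
            changes[key] = {"from": old.get(key), "to": new.get(key)}
    return changes


def apply_update(
    document: dict[str, Any],
    incoming: dict[str, Any],
    source: str,
    now: Optional[datetime] = None,
) -> dict[str, Any]:
    """Return a new document; log a history entry only when data changed."""
    now = now or datetime.now(timezone.utc)
    changes = diff_fields(document.get("data", {}), incoming)
    # Freshness: record that the source was checked, even with no changes.
    updated = {**document, "last_checked_at": now}
    if changes:
        updated["data"] = dict(incoming)
        updated["updated_at"] = now
        # Provenance: each entry records when, from where, and what changed.
        updated["history"] = [
            *document.get("history", []),
            {"at": now, "source": source, "changes": changes},
        ]
    return updated
```

Separating `last_checked_at` from `updated_at` lets a reader distinguish "this record was confirmed recently" from "this record changed recently", which are different freshness signals.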

Asynchronous ingestion & updates

Queue-based background workflows enabling independent ingestion, partial updates, and fault isolation across data sources.
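
The fault-isolation idea can be shown with a small in-process queue. This is a simplified Python sketch under stated assumptions: in the real system this role would be played by the Laravel queue stack listed under Technologies, while `run_ingestion`, the `(source, fetch)` job shape, and the retry limit here are all hypothetical.

```python
import queue
from typing import Callable, Iterable

# A job is a source name plus a callable that fetches that source's records.
Job = tuple[str, Callable[[], Iterable[dict]]]


def run_ingestion(jobs: list[Job], max_attempts: int = 2) -> dict[str, str]:
    """Process one job per source; a failing source never blocks the others."""
    pending: queue.Queue = queue.Queue()
    for source, fetch in jobs:
        pending.put((source, fetch, 1))

    status: dict[str, str] = {}
    while not pending.empty():
        source, fetch, attempt = pending.get()
        try:
            count = sum(1 for _ in fetch())
            status[source] = f"ok ({count} records)"
        except Exception as exc:
            if attempt < max_attempts:
                # Re-queue at the back so other sources proceed first.
                pending.put((source, fetch, attempt + 1))
            else:
                status[source] = f"failed: {exc}"
    return status


def healthy_source() -> list[dict]:
    return [{"id": 1}, {"id": 2}]


def broken_source() -> list[dict]:
    raise RuntimeError("registry unavailable")


print(run_ingestion([("a", healthy_source), ("b", broken_source)]))
```

Because each source is its own job, an outage in one registry shows up as a single failed entry in the status map while the remaining sources finish normally.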

Admin-friendly UI

Server-rendered interfaces for browsing, filtering, and inspecting aggregated data without heavy client-side complexity.

Development process

1. Source analysis & data mapping

Multiple public registries were analyzed to identify structural differences, update patterns, and data quality issues. A unified data model was designed to balance flexibility with consistency.

2. Ingestion architecture design

A queue-based ingestion pipeline was implemented, allowing independent processing of each source and minimizing the impact of partial failures.

3. Development & normalization

Normalization layers were introduced to unify fields, resolve inconsistencies, and establish relationships between entities across datasets. MongoDB was selected to support document-based modeling and schema evolution.

4. Stabilization & freshness control

Update tracking, change logging, and source attribution mechanisms were added to ensure long-term data reliability and transparency.

Result

The platform became a centralized, scalable foundation for working with public open data, enabling:

- A single source of truth across multiple registries

- Faster and more reliable search and cross-entity analysis

- Reduced manual effort in data reconciliation

- Improved transparency through data provenance and update history

- A flexible architecture ready for onboarding new data sources