Retail Scraping & Ingestion System for E-commerce Data Collection

Product team

1 backend engineer

1 data/scraping engineer

QA support during stabilization phase

Duration: 6 months

Technologies

Laravel

Python

PostgreSQL

Redis

Laravel Horizon

Production Context

Collecting retail product and pricing data at scale is inherently unstable. E-commerce sources frequently change page structures, pagination logic, and loading strategies, while actively limiting automated access. Failures are common, partial results are expected, and data freshness is critical for downstream analytics, pricing, and monitoring systems.

About project

The project is a scalable data extraction and ingestion system designed to reliably collect e-commerce product cards and promotional data from multiple retail sources under unstable conditions.

The platform supports various pagination and loading strategies, isolates failing sources, and operates through a managed proxy pool to ensure high availability and consistent extraction results.

On the backend, the system provides ingestion APIs, execution tracking, and detailed observability, allowing teams to monitor scraping runs, analyze failures, and maintain data quality at scale.

Core Capabilities

Reliable retail data extraction

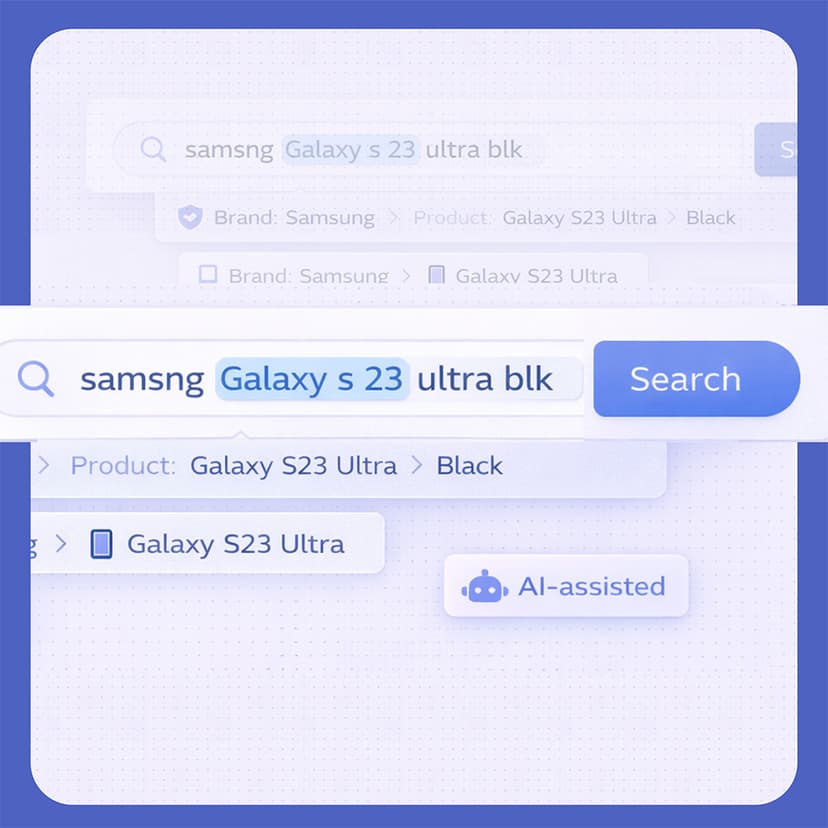

Automated collection of product cards, pricing, and promotional data across multiple e-commerce sources with support for different pagination and loading strategies.

Managed proxy pool

Intelligent proxy rotation, health reporting, and bad-proxy isolation to maximize scrape success rates and minimize blocking.

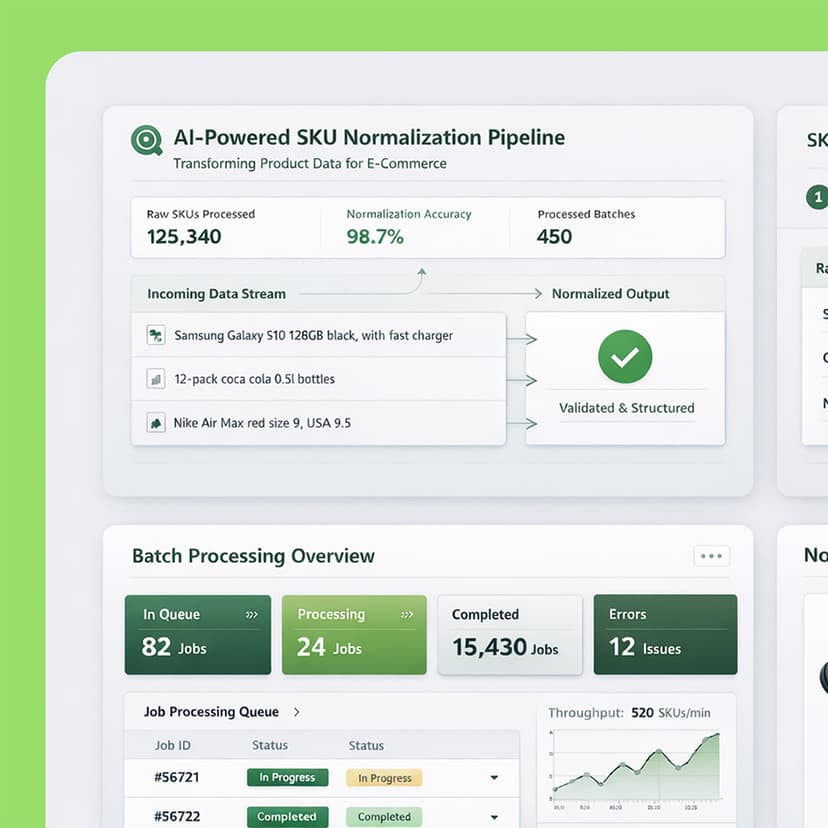

Ingestion APIs

Backend APIs for batch data intake, validation, and normalization before persisting results to storage.

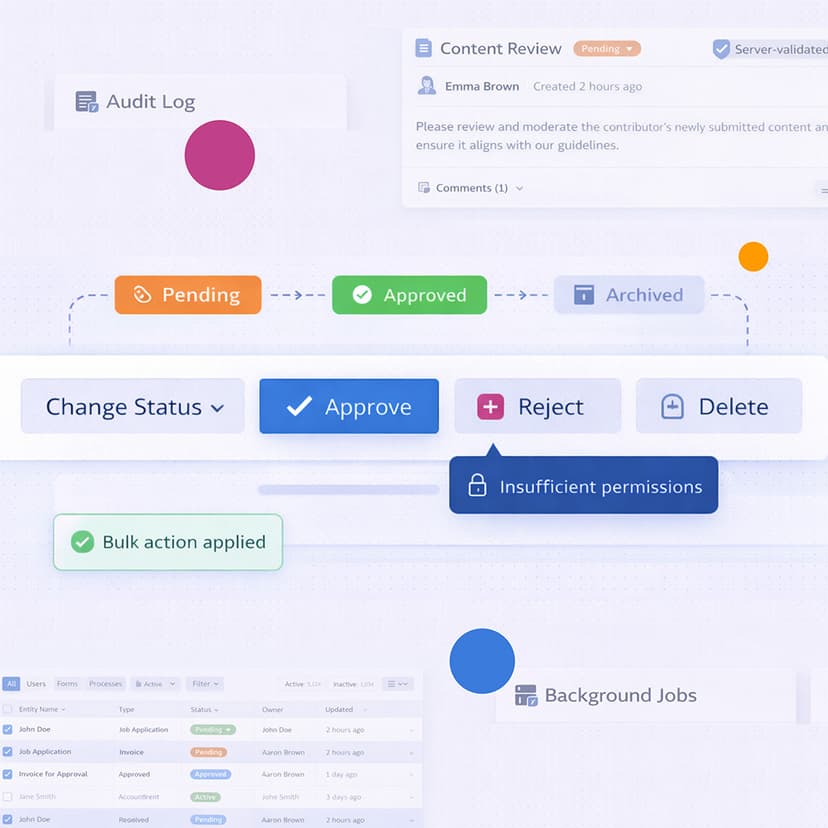

Run tracking & observability

Detailed execution logs including run start/end, duration, metrics, errors, and partial success tracking.

Fault-tolerant processing

Retry strategies, error isolation, and partial-failure handling to ensure long-running jobs remain stable.

Asynchronous background workflows

Queue-driven scraping and ingestion pipelines managed via Horizon for visibility and control.

The Process

Discovery & requirements analysis

We analyzed data source variability, pagination patterns, anti-bot constraints, and expected data volumes. Based on this, we defined reliability goals, proxy usage policies, and ingestion requirements.

Architecture design

A queue-based architecture was designed to decouple scraping, ingestion, and persistence. Special focus was placed on observability, retry control, and isolation of failing sources or proxies.

Development & integration

The backend ingestion layer was implemented in Laravel, while scraping logic was integrated via Python and Playwright. Redis-backed queues were used to orchestrate scraping and ingestion jobs reliably.

Stabilization & monitoring

We introduced detailed logging, metrics, and run tracking to quickly detect failures and optimize performance across data sources.

Result

The resulting system became a stable foundation for large-scale retail data collection across multiple sources.

The platform enabled:

- Continuous ingestion of product and pricing data despite frequent source instability

- Reduced manual intervention through fault isolation and retry control

- Improved visibility into scraping health, failures, and partial results

- Independent scaling of scraping and ingestion workloads

- Faster onboarding of new retail sources with minimal operational overhead

The architecture proved resilient under long-running jobs, high data volumes, and frequent source-level failures.